Classification and Regression Trees (CART)-Classifier

CART algorithms were first published by Leo Breiman and colleagues in 1984. As the name suggests, they are built on decision trees, a popular decision support tool in machine learning. Growing the tree means adding basic if-else decision rules learned from the training data, so that a model is built up through piecewise approximation. The more branches the decision tree has, the more closely the model can fit the training data.

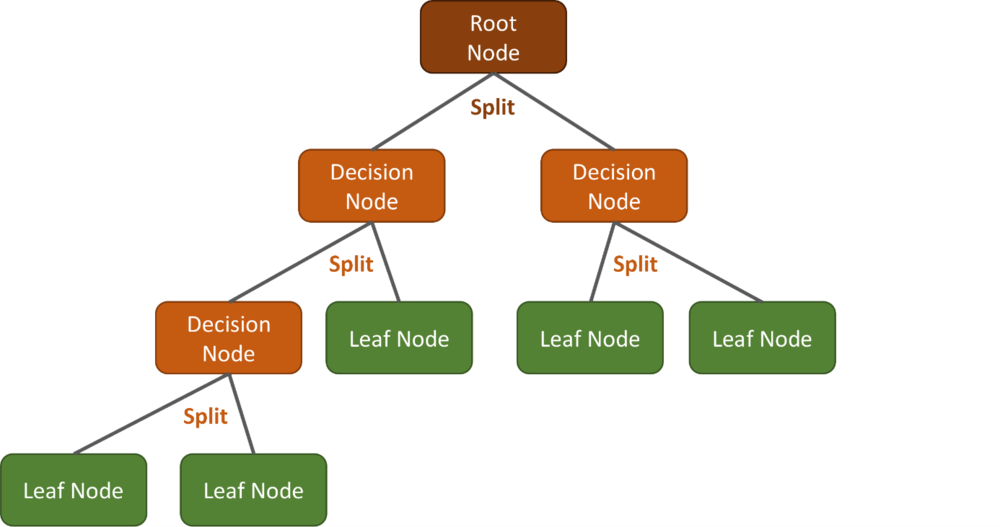

Basically, a decision tree is a rather simple structure that consists of three kinds of elements: one Root Node, which is the starting point and includes all training samples; multiple Decision Nodes, where we split our data using simple if-else decision rules; and multiple Terminal Nodes, where we eventually assign the classes for our classification purpose.
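The three kinds of nodes can be sketched as a small data structure. This is only a minimal illustration, not the implementation of any particular library: the class names `Leaf` and `Decision`, the feature names `ndvi` and `nir`, and all thresholds and class labels are invented for this example.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Leaf:
    """Terminal Node: stores the class assigned to samples that end up here."""
    label: str


@dataclass
class Decision:
    """Root or Decision Node: one if-else rule on a single feature."""
    feature: str        # name of the feature to test
    threshold: float    # rule: is the feature value <= threshold?
    if_true: "Node"     # branch followed when the rule holds
    if_false: "Node"    # branch followed otherwise


Node = Union[Leaf, Decision]


def predict(node: Node, sample: dict) -> str:
    """Walk from the Root Node down to a Terminal Node and return its class."""
    while isinstance(node, Decision):
        if sample[node.feature] <= node.threshold:
            node = node.if_true
        else:
            node = node.if_false
    return node.label


# Hypothetical hand-built tree (features and thresholds are assumptions)
tree = Decision(
    feature="ndvi", threshold=0.2,
    if_true=Decision("nir", 0.1, Leaf("water"), Leaf("urban")),
    if_false=Leaf("forest"),
)

print(predict(tree, {"ndvi": 0.6, "nir": 0.4}))    # forest
print(predict(tree, {"ndvi": 0.1, "nir": 0.05}))   # water
```

In a real CART model the rules are not written by hand but learned from the training samples; only the way a sample travels through the tree is the same.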

If we look at the example graph, it is easy to see that adding more Decision Nodes increases not only the complexity of our model, but also how accurately it fits the training data.
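The effect of additional Decision Nodes can be reproduced with a very small tree grower. The sketch below assumes Gini impurity as the split criterion, which is the criterion commonly used by CART for classification; the toy data, the function names and the tuple-based tree layout are invented for illustration, and class labels are assumed to be strings.

```python
from collections import Counter


def gini(labels):
    """Gini impurity: 0.0 for a pure node, larger for mixed classes."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(X, y):
    """Return (feature index, threshold) with the lowest weighted Gini.

    Returns None if no split improves on the unsplit node.
    """
    best, best_score = None, gini(y)
    for f in range(len(X[0])):
        for t in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if score < best_score:
                best, best_score = (f, t), score
    return best


def grow(X, y, max_depth):
    """Grow a tree recursively; Decision Nodes are tuples, Terminal Nodes are labels."""
    split = best_split(X, y) if max_depth > 0 else None
    if split is None:
        return Counter(y).most_common(1)[0][0]   # Terminal Node: majority class
    f, t = split
    left = [(row, lab) for row, lab in zip(X, y) if row[f] <= t]
    right = [(row, lab) for row, lab in zip(X, y) if row[f] > t]
    return (f, t,
            grow([r for r, _ in left], [lab for _, lab in left], max_depth - 1),
            grow([r for r, _ in right], [lab for _, lab in right], max_depth - 1))


def predict(tree, row):
    """Follow the if-else rules until a Terminal Node (a plain label) is reached."""
    while isinstance(tree, tuple):
        f, t, left, right = tree
        tree = left if row[f] <= t else right
    return tree


# Invented toy data: one feature, seven training samples
X = [[1], [2], [3], [4], [5], [6], [7]]
y = ["a", "a", "a", "b", "b", "b", "a"]

hits = []
for depth in (0, 1, 2):
    tree = grow(X, y, depth)
    hits.append(sum(predict(tree, row) == lab for row, lab in zip(X, y)))
print(hits)  # correct training predictions out of 7 per depth: [4, 6, 7]
```

Note that these counts are measured on the same samples the tree was grown from, so they say nothing yet about how well the tree classifies unseen data.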

Advantages of Decision Trees:

Compared to other classification algorithms, the concept is rather easy to understand.

The decision tree can be visualized, which helps in understanding and interpreting it.

Decision trees can handle not only numeric, but also categorical data.

Disadvantages of Decision Trees:

Prone to overfitting, which means creating extremely complex trees that fit the training data closely but fail to generalize to new data.

A single decision tree is unstable: even small variations in the data can lead to a very different Decision Tree. This can be mitigated by using ensembles of Decision Trees, which we will also look at in the next chapter.

Depending on how the Decision Nodes are chosen, the tree can easily become biased, which means that certain classes dominate the Decision Tree, for example when some classes are much more frequent in the training data than others.
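The overfitting risk listed above can be made visible by comparing a fully grown tree with a depth-limited one on held-out data. The following is a rough sketch that assumes scikit-learn is installed; the synthetic data set, the noise level, the 50/50 split and the depth limit of 3 are arbitrary choices for illustration, not recommendations.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic data; flip_y=0.2 reassigns the class of about 20% of the
# samples at random, i.e. noise that a good model should not memorize.
X, y = make_classification(n_samples=600, n_features=10, n_informative=4,
                           flip_y=0.2, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.5, random_state=0)

# Fully grown tree: keeps splitting until every Terminal Node is pure
full = DecisionTreeClassifier(random_state=0).fit(X_train, y_train)

# Restricted tree: at most three levels of if-else rules
small = DecisionTreeClassifier(max_depth=3, random_state=0).fit(X_train, y_train)

for name, model in (("full", full), ("max_depth=3", small)):
    print(name,
          "depth:", model.get_depth(),
          "train:", round(model.score(X_train, y_train), 2),
          "test:", round(model.score(X_test, y_test), 2))
```

The fully grown tree classifies its own training samples perfectly, but its accuracy on the held-out samples is noticeably lower. How the depth-limited tree compares depends on the data, so the limit is best treated as a parameter to tune rather than a fixed value.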

Further reading to dive deeper into the theory:

https://blogs.fu-berlin.de/reseda/random-forest/

Liaw, A., Wiener, M. (2002): Classification and Regression by randomForest. In: R News, Volume 2, Issue 3. Pages 18-22.

Pal, M. (2005): Random Forest Classifier for Remote Sensing Classification. In: International Journal of Remote Sensing, Volume 26, Issue 1. Pages 217-222.